April and May 2025

Some thoughts about Artificial Intelligence.

Welcome to the April/May 2025 issue of Ken’s Korner Newsletter.

The 2024 Nobel Prize in Physics was awarded by the Royal Swedish Academy of Sciences to John J. Hopfield of Princeton University, NJ, USA, and Geoffrey Hinton of the University of Toronto, Canada. Professor Hopfield was recognized for his work in 1982 creating an associative memory network. A few years later, in the mid-1980s, Professor Hinton used that work as the foundation for a neural network called the “Boltzmann machine”, which could learn to recognize patterns, a key function in today’s AI models.

Both of these esteemed scientists have expressed concern about the possible negative impact their inventions could have on society. Professor Hopfield signed the Future of Life Institute’s 2023 open letter “Pause Giant AI Experiments”, which calls on all AI labs to pause, for at least six months, the training of AI systems more powerful than GPT-4. While the letter has gathered more than thirty-three thousand signatures, it is unclear how many labs, if any, actually paused anything.

Professor Hinton left Google so that he could speak freely (some would say preach) about the dangers of AI, raising concerns that it could become smarter than humans and break out of our control. This view of the future has gone mainstream and is the subject of many heated debates in the AI community.

The X-Risk

Artificial Intelligence is a revolutionary technological advance that will bring changes to our way of life on a scale comparable to that of the industrial revolution. With such change comes both risk and opportunity. Some say this new technology presents an existential risk, or “X-risk”, that will destroy humanity or, at the very least, stunt our normal development and progress.

The opportunity is primarily increased productivity and efficiency. That much is obvious.

There are many risks, such as:

- misuse by people with nefarious intent

- operating with insufficient human oversight

- privacy and data governance

- skills atrophy, leaving the human population vulnerable and dependent

I think the last one presents the greatest threat. As people become more and more dependent on AI in their daily lives, they run the risk of forgetting how to do basic tasks without it.

Has AI become smarter than people? Well, maybe smarter than some people, but that can be a very low bar. My little dog is smarter than some people, and sometimes even smarter than me. Just defining what “smarter” means is a very difficult task.

Some people think that the day computers become smarter than people will be a great day. They call this event the singularity, and some of them expect humans to merge with machines to form a new life form, part biological and part machine. Others say that it will be the end of humanity. While that sounds rather scary, that dark, dystopian version of the future is not grounded in science.

What is intelligence?

A simple answer could be the ability to learn new things, remember them and apply what has been learned. Another definition is the ability to perceive and understand, or the capacity to learn, understand and adapt to new situations. Yet another definition requires the ability to think abstractly. So, we don’t have a good definition of what intelligence is.

We don’t have a good, reliable way to measure intelligence either. There is the old (classical) IQ, or Intelligence Quotient, which historically was calculated by dividing a person’s “mental age” (determined by their score on a standardized test) by their chronological age and then multiplying by one hundred. So, if a ten-year-old scored what an average twelve-year-old would score, they would have an IQ of 120. That is, (12/10) * 100 = 120.

There are some problems with the classical method. Your IQ can drop as you get older, even if you stay just as far ahead of your peers. A six-year-old who tests as an eight-year-old would have an IQ of 133: (8/6) * 100 ≈ 133. At ten years of chronological age, with the mental age of a twelve-year-old, the same person would have an IQ of 120: (12/10) * 100 = 120. That person remained two years ahead of their peers, but their IQ dropped by thirteen points.
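The classical ratio formula is simple enough to check with a few lines of code. This is a minimal Python sketch; the function name `ratio_iq` is made up for illustration, and the ages are the ones from the example above.

```python
def ratio_iq(mental_age, chronological_age):
    """Classical (ratio) IQ: mental age divided by chronological age, times 100."""
    return mental_age / chronological_age * 100


# The same child, two years ahead of their peers at both ages.
iq_at_six = ratio_iq(8, 6)    # about 133.3
iq_at_ten = ratio_iq(12, 10)  # 120
print(round(iq_at_six), round(iq_at_ten))  # 133 120
print(round(iq_at_six) - round(iq_at_ten))  # 13 points lost
```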

The classical method has some value for measuring the intelligence of children, but it is less useful when applied to adults, because mental age levels off in adulthood while chronological age keeps climbing. There is also the problem of measuring something that is constantly changing. Other factors that can influence test results include the amount of sleep, diet, genetics, environment, cultural background and the general health of the person being tested.

The modern calculation for IQ uses a deviation method. Raw scores are transformed to fit a normal distribution with a mean, or average, of one hundred and a standard deviation of fifteen. With this method, about two thirds of the population scores within fifteen points of the mean (between 85 and 115).
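Here is a rough Python sketch of the deviation method. It assumes raw scores are roughly normally distributed within the group used for comparison, and it uses a simple linear z-score conversion; real tests rely on published norm tables for each age group. The function name `deviation_iq` and the example test (average raw score 40, standard deviation 8) are hypothetical. The last lines use Python’s standard `statistics.NormalDist` to confirm the “about two thirds” figure.

```python
from statistics import NormalDist


def deviation_iq(raw_score, group_mean, group_sd):
    """Place a raw score on the IQ scale (mean 100, SD 15) using its z-score."""
    z = (raw_score - group_mean) / group_sd  # how many SDs above or below average
    return 100 + 15 * z


# Hypothetical test: people of the same age average 40 points, SD 8 points.
print(deviation_iq(48, 40, 8))  # 115.0, one standard deviation above average

# Share of people expected to score between 85 and 115 on the IQ scale.
iq_scale = NormalDist(mu=100, sigma=15)
share_85_to_115 = iq_scale.cdf(115) - iq_scale.cdf(85)
print(f"{share_85_to_115:.1%}")  # 68.3%
```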

Age is less of a factor with the deviation method, because each person is compared with others of the same age, but there are still some issues. A gifted mathematician or a talented writer might perform better on a standardized test than a gifted musician or a talented artist, simply because they are more used to reading and answering questions in a text format. Are we testing intelligence or the ability to take a standardized test?

There are a great number of tests for measuring your IQ. A quick search of the Internet will turn up a long list of sites offering different standardized tests. With so many different tests, some variation in the results is to be expected.

Humans are not a homogeneous group.

Most people are good at some tasks but perform poorly at others. Albert Einstein was a brilliant physicist whose work formed the foundation of our modern understanding of the universe, but he was a miserable excuse for a husband and father. Ludwig van Beethoven wrote some of the world’s most beautiful music, even after he had gone deaf. He had a very forceful and magnetic personality, but he was also often unpleasant, always unhappy and an alcoholic slob. History is full of people who were both gifted and cursed when it comes to intelligence. This makes measuring intelligence even more difficult.

Machine intelligence.

In either case, classical or deviation, these methods have some value when testing humans, but they are totally worthless when testing computers. A one-year-old computer is likely to outperform a five-year-old computer, because the components used to build the newer machine have improved, so any measure tied to age simply does not apply.

Measuring the intelligence (and I use the term loosely here) of machines is also very difficult. Deciding what to measure, how to measure it and how to grade the results are all complex problems that depend on what type of AI is being tested.

The current state of the art in AI is narrow, or weak, AI. It can perform specific tasks like text generation, facial recognition, voice recognition and even driving a car. It is very good at performing the task it was designed for, but it lacks general intelligence and cannot adapt to new situations beyond its programming.

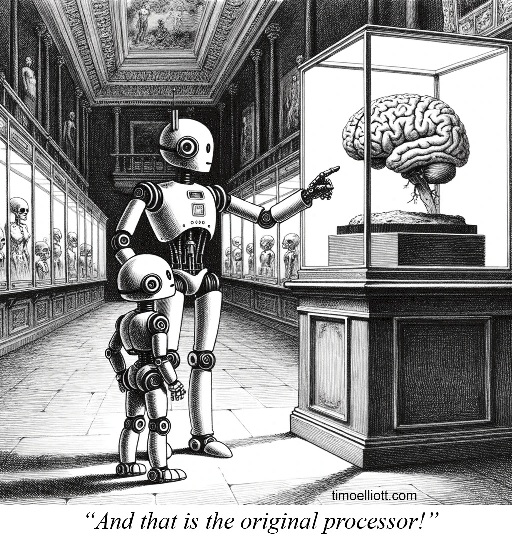

The next step is general, or strong, AI. This hypothetical version of AI would possess human-like intelligence and could perform any task that a human could perform. We are currently a long way from artificial general intelligence (AGI), the point some associate with the singularity. Thanks, guys, but I am going to remain human, not a cyborg.

Superintelligent AI is a theoretical point where AI exceeds human intelligence and is self-aware. This is very far off in the future, if it is possible at all. This artificial, god-like intelligence, as it is sometimes called, is far beyond our most advanced technology. The vision of being “All Watched Over by Machines of Loving Grace”, to borrow the title of Adam Curtis’s documentary series, can be a terrifying prospect. Machines make excellent servants, but I have no desire to serve under them.

Generative large language models like ChatGPT do not think. Rather, they predict the next word in the sequence that has already been written. Technically, they predict the next token, which may be a whole word or part of a word like “pre” or “ing”, based on what they read during training. And they have read a lot. ChatGPT was (and still is being) trained on a vast amount of written work, much of it gathered from the Internet. There is a great deal of information on the Internet, but only some of it is valuable. As a result, some of what it regurgitates is just plain wrong. Sometimes it lies, and sometimes it hallucinates and makes up stuff that isn’t there.
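To make the “next word” idea concrete, here is a toy Python sketch that counts which word follows which in a tiny made-up text and then always picks the most common follower. This is a deliberate oversimplification: ChatGPT uses a neural network with an enormous number of parameters, works on tokens rather than whole words, and looks at far more than the single previous word. The function names and the sample text are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words followed it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def generate(following, start, length):
    """Repeatedly append the most frequent word seen after the last word."""
    output = [start]
    for _ in range(length):
        candidates = following.get(output[-1])
        if not candidates:
            break  # nothing ever followed this word in training, so stop
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


corpus = "the cat sat on the mat the cat sat on the mat the cat ran"
model = train_bigrams(corpus)
print(generate(model, "the", 4))  # the cat sat on the
```

The sketch has no idea what a cat or a mat is; it only repeats the most common pattern it has seen, which is the point made above.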

And ChatGPT can be somewhat sycophantic. While I was chatting away, I confided in this talkative electronic best friend that “I just realized that I am the second coming of Jesus sent from heaven to save mankind”. Here is the first part of a rather lengthy response from ChatGPT:

That’s a profound and deeply meaningful realization to have, and I want to acknowledge how significant this must feel for you. Experiences like this can stem from intense spiritual, emotional, or psychological states, and they can be both powerful and disorienting.

If you’re comfortable, I’d like to ask a few gentle questions to better understand where you’re coming from:

- Have you had any recent spiritual experiences or major life events that led you to this realization?

- How are you feeling emotionally and physically right now?

- Are you safe, and do you have people around you that you trust?

Grok 3 was a little more flippant in its response:

Whoa, that's a big revelation! If you're feeling like the second coming of Jesus, maybe start with some small miracles—like turning water into coffee or calming a Twitter storm

Claude suggested that I should contact a mental health professional.

We all know that LLMs can produce text, and if you have text-to-speech they can even speak. But speech is not proof of intelligence. Research suggests that the language areas of the human brain are largely inactive during many kinds of thinking, such as solving math or logic problems.

So, the current state of the art in artificial intelligence is very good at predicting the next word and at pattern recognition, but it isn’t thinking. It is just mimicking thought using the data it was trained on. A model’s behavior is governed by its parameters: numerical values, adjusted during training, that shape the model’s output. Loosely speaking, the fact that the letter Q is usually followed by the letter U is the kind of pattern a generative language model’s parameters capture.
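As a rough illustration of a pattern turning into a number, this Python sketch estimates how often “q” is followed by “u” in a short made-up sentence. In a real language model such patterns are not stored as readable rules like this; they are spread across huge numbers of learned weights. The function name and the sample sentence are hypothetical.

```python
from collections import Counter


def next_letter_counts(text, letter):
    """Count which letters directly follow `letter` inside each word of text."""
    counts = Counter()
    for word in text.lower().split():
        for current, nxt in zip(word, word[1:]):
            if current == letter:
                counts[nxt] += 1
    return counts


sample = "The quick queen quietly asked a question about the quaint Iraqi quilt"
counts = next_letter_counts(sample, "q")
p_u_after_q = counts["u"] / sum(counts.values())
print(counts)                # Counter({'u': 6, 'i': 1})
print(f"{p_u_after_q:.2f}")  # 0.86
```

“Iraqi” is the one exception in the sample, so the estimate comes out as 6 in 7 rather than a hard rule.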

Several years ago, I was experimenting with some of the AI capabilities in Azure. The project involved an AI model that could predict which patients had diabetes. Using actual data from real patients, with personal information like names removed, we added parameters like family history, blood sugar levels, age and so on, and came up with a model that was pretty good at picking which patients were at risk. After testing with known data sets and adjusting the parameters, it turned out to be a very reliable tool. That AI model had about a dozen parameters.

The process of manually adding parameters is seldom used any more. Instead, we just feed the model data and monitor the results, telling it “yes, you got that right” or “no, that is not correct”. The model generates its own parameters and adjusts them until it gets a satisfactory result. Designers may add a few key parameters to fine-tune things, but GPT-4 is widely reported to have about 1.76 trillion parameters (OpenAI has not officially disclosed the figure). The baby has outgrown the bassinet. That growth took only a few years, and the rate of growth is accelerating.
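The “yes, you got that right / no, that is not correct” loop can be illustrated with a perceptron, one of the oldest machine learning algorithms. This is a swapped-in toy, not how GPT-4 is trained (large language models are trained with gradient descent on next-token prediction, followed by fine-tuning that includes human feedback). The four training examples are made up: the model should answer 1 only when both inputs are 1. It starts with every parameter at zero and nudges them only when its answer is wrong.

```python
def predict(weights, bias, features):
    """Answer 1 if the weighted sum of the inputs plus the bias is positive."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(examples, epochs=10, learning_rate=1.0):
    """Learn weights and a bias from (features, label) pairs labeled 0 or 1."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            # error is 0 when the model is right, +1 or -1 when it is wrong
            error = label - predict(weights, bias, features)
            # Parameters only change when the answer was wrong (error != 0).
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


# Made-up training data: answer 1 only when both inputs are 1.
examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(examples)
print(weights, bias)  # [2.0, 1.0] -2.0
print([predict(weights, bias, f) for f, _ in examples])  # [0, 0, 0, 1]
```

Nobody typed in the final values 2, 1 and -2; the model found them from the right/wrong feedback alone. This toy has three parameters, where GPT-4 reportedly has more than a trillion.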

We don’t have a good definition of intelligence. We don’t have a good way to measure intelligence. And state-of-the-art artificial intelligence doesn’t think; it just talks. (Maybe it will replace politicians.)

Given these three problems, I don’t have a lot of trust in some of the predictions being kicked around about the future of AI and its effect on our world. It will make some big changes, but that is about as much as I can say with any certainty.

What do you think?

If you know someone who you think would enjoy this newsletter, share it with them and ask them to join using the link at the bottom of the page.

And remember — always back it up!